cascadeforwardnet

Generate cascade-forward neural network

Description

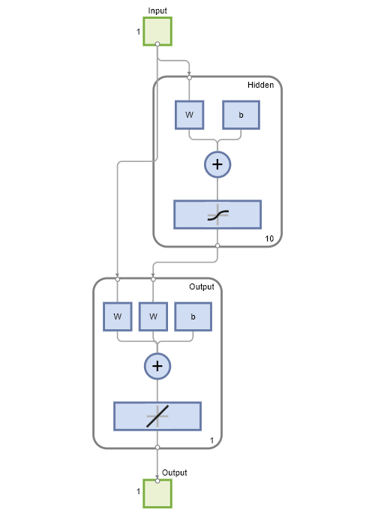

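`net = cascadeforwardnet(hiddenSizes,trainFcn)` returns a cascade-forward neural network with hidden layer sizes given by `hiddenSizes` and the training function specified by `trainFcn`. `hiddenSizes` is a row vector with one element per hidden layer. For example, `[10 5]` creates two hidden layers with 10 and 5 neurons.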
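The sketch below shows how `hiddenSizes` determines the network's layers and parameter count. It assumes the cascade pattern used by this network: the input feeds every layer, and each layer feeds all layers after it. It also assumes one output layer and one bias vector per layer. The input and output sizes are supplied by hand here, whereas the toolbox takes them from the training data. The function names are hypothetical. The boolean masks loosely mirror the `inputConnect` and `layerConnect` properties of a MATLAB network object, but this is not toolbox code.

```python
def cascade_connectivity(num_hidden):
    """Connection masks for a cascade net with num_hidden hidden layers plus one output layer.

    input_connect[i]    is True if the network input feeds layer i.
    layer_connect[i][j] is True if the output of layer j feeds layer i.
    """
    num_layers = num_hidden + 1                            # hidden layers + output layer
    input_connect = [True] * num_layers                    # the input reaches every layer
    layer_connect = [[j < i for j in range(num_layers)]    # every earlier layer feeds layer i
                     for i in range(num_layers)]
    return input_connect, layer_connect


def cascade_param_count(input_size, hidden_sizes, output_size):
    """Total weights plus biases, assuming one bias vector per layer (hypothetical helper)."""
    sizes = list(hidden_sizes) + [output_size]
    total = 0
    for i, size in enumerate(sizes):
        fan_in = input_size + sum(sizes[:i])  # network input + outputs of all earlier layers
        total += size * fan_in + size         # weight matrix + bias vector
    return total


# hiddenSizes = [10] (one hidden layer) vs. [10 5] (two hidden layers)
print(cascade_param_count(1, [10], 1))     # 32: 20 in the hidden layer, 12 in the output layer
print(cascade_param_count(2, [10, 5], 1))  # 113
```

For comparison, a plain feed-forward network with one input, 10 hidden neurons, and one output has 31 parameters under the same counting. The one extra parameter here is the direct input-to-output weight.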

Cascade-forward networks are similar to feed-forward networks, but they add extra connections. The input connects to every layer, and each layer connects to all of the layers that follow it, not only the next one.
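The connection pattern can be made concrete with a forward pass. In this minimal NumPy sketch, each layer is fed by the vertical stack of the network input and every earlier layer's output. The hidden layers use `tanh` and the output layer is linear, which mirrors the tansig/purelin pairing commonly used for fitting networks; that choice is an assumption of the sketch. The names `init_cascade` and `cascade_forward` are hypothetical and do not describe the toolbox's internal implementation.

```python
import numpy as np


def init_cascade(input_size, hidden_sizes, output_size, seed=0):
    """Random weights whose shapes follow the cascade pattern (illustrative only)."""
    rng = np.random.default_rng(seed)
    sizes = list(hidden_sizes) + [output_size]
    weights, biases = [], []
    fan_in = input_size
    for size in sizes:
        weights.append(rng.normal(scale=0.5, size=(size, fan_in)))
        biases.append(np.zeros((size, 1)))
        fan_in += size  # the next layer also sees this layer's output
    return weights, biases


def cascade_forward(x, weights, biases, hidden_act=np.tanh):
    """Forward pass; x has shape (input_size, n_samples), one column per sample."""
    signals = [x]  # network input followed by each layer's output, in order
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = W @ np.vstack(signals) + b  # input plus all earlier layer outputs
        is_output = i == len(weights) - 1
        signals.append(z if is_output else hidden_act(z))  # linear output layer
    return signals[-1]


x = np.linspace(-1.0, 1.0, 5).reshape(1, -1)  # 1 input, 5 samples
weights, biases = init_cascade(1, [4, 3], 1)   # analogous to hiddenSizes = [4 3]
y = cascade_forward(x, weights, biases)
print(y.shape)  # (1, 5)
```

Because the output layer sees the raw input, zeroing its hidden-layer weights still leaves a linear path from input to output. That path is the main structural difference from a plain feed-forward network.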

As with feed-forward networks, a cascade network with two or more layers can learn any finite input-output relationship arbitrarily well, given enough hidden neurons.
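The effect of adding hidden neurons can be illustrated numerically, though not proved. This sketch uses a swapped-in technique: it does not train with `trainFcn`. Instead it fixes a pool of random `tanh` hidden units and solves only the output layer by least squares, which is a random-features fit. The output layer sees the hidden units, the raw input (the cascade connection), and a bias. Because each larger network uses the first `k` units of the same pool, the candidate output columns are nested, so the training error cannot increase as `k` grows. The target `sin(pi * x)` and the name `fit_error` are arbitrary choices for the illustration.

```python
import numpy as np


def fit_error(k, x, t, W_all, b_all):
    """Training RMSE of a one-hidden-layer cascade net using the first k random tanh units.

    Only the output layer is fitted (least squares); hidden weights stay fixed.
    """
    H = np.tanh(W_all[:k] @ x + b_all[:k])          # hidden outputs, shape (k, n)
    design = np.vstack([H, x, np.ones_like(x)]).T   # hidden units + direct input + bias
    coef, *_ = np.linalg.lstsq(design, t, rcond=None)
    residual = design @ coef - t
    return float(np.sqrt(np.mean(residual ** 2)))


rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 200).reshape(1, -1)  # one input, 200 samples
t = np.sin(np.pi * x).ravel()                   # target relationship to fit
W_all = rng.normal(scale=3.0, size=(50, 1))     # fixed pool of 50 hidden units
b_all = rng.normal(scale=1.0, size=(50, 1))

errors = {k: fit_error(k, x, t, W_all, b_all) for k in (1, 5, 20, 50)}
for k, err in errors.items():
    print(f"{k:2d} hidden neurons: training RMSE = {err:.2e}")
```

Actual training with a toolbox training function adjusts all weights, so its errors will differ. The point of the sketch is only the trend as neurons are added.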

Examples

Input Arguments

Output Arguments

Version History

Introduced in R2010b